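Building an AI micro-SaaS often means collecting information from the web. Below are some representative scraping methods that come up frequently in practice, along with reference code: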

1. AI-Content-Ideas-Generator-Prototype

Automatically opens a browser, extracts the main body of an article, and has AI summarize it.

https://github.com/hassancs91/AI-Content-Ideas-Generator-Prototype

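```python
from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium_stealth import stealth

chrome_options = Options()
chrome_options.add_argument("--headless")  # run Chrome without a visible window
# Drop the switches that announce "Chrome is being controlled by automated software"
chrome_options.add_experimental_option("excludeSwitches", ["enable-automation"])
chrome_options.add_experimental_option("useAutomationExtension", False)
driver = webdriver.Chrome(options=chrome_options)

# Patch browser fingerprints (languages, vendor, WebGL info) so the session looks less automated
stealth(
    driver,
    languages=["en-US", "en"],
    vendor="Google Inc.",
    platform="Win32",
    webgl_vendor="Intel Inc.",
    renderer="Intel Iris OpenGL Engine",
    fix_hairline=True,
)
```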

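"Selenium Stealth" is a tool for the Selenium framework that helps automate browser actions more covertly, so that websites are less likely to detect and block them. In web scraping, automated testing, and other scenarios that simulate a real user, you sometimes need to avoid being identified as an automation tool and having your access restricted.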

```python
import newspaper
from newspaper.article import ArticleDownloadState


def get_article_from_url(url):
    try:
        # Scrape the web page for content using newspaper, with a 10-second download timeout
        config = newspaper.Config()
        config.request_timeout = 10
        article = newspaper.Article(url, config=config)
        # Download the article's HTML
        article.download()
        # Check if the download was successful before parsing the article
        if article.download_state == ArticleDownloadState.SUCCESS:
            article.parse()
            # Get the main text content of the article
            article_text = article.text
            return article_text
        else:
            print("Error: Unable to download article from URL:", url)
            return None
    except Exception as e:
        print("An error occurred while processing the URL:", url)
        print(str(e))
        return None
```

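newspaper3k is a popular Python library for extracting content from news sites and articles, such as the body text, title, authors, publish date, and images. It makes it easy to pull information from online news sources for data analysis, natural language processing, and similar tasks.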
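newspaper3k locates the article body with its own heuristics. As a rough illustration of the idea only (not newspaper3k's algorithm), the sketch below uses the standard library's `html.parser` to keep the text of `<p>` elements that are long enough to look like body text. The names `ParagraphExtractor`, `extract_main_text` and the `min_words` threshold are hypothetical.

```python
from html.parser import HTMLParser


class ParagraphExtractor(HTMLParser):
    """Collect the text inside <p> tags, ignoring <script>/<style> content."""

    def __init__(self):
        super().__init__()
        self.paragraphs = []
        self._in_p = False
        self._skip_depth = 0
        self._buf = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "p":
            self._in_p = True
            self._buf = []

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1
        elif tag == "p" and self._in_p:
            # Collapse whitespace; inline tags like <b> contribute their text only
            text = " ".join("".join(self._buf).split())
            if text:
                self.paragraphs.append(text)
            self._in_p = False

    def handle_data(self, data):
        if self._in_p and not self._skip_depth:
            self._buf.append(data)


def extract_main_text(html, min_words=5):
    """Join paragraphs with at least min_words words; very short ones are often navigation."""
    parser = ParagraphExtractor()
    parser.feed(html)
    parser.close()
    return "\n\n".join(p for p in parser.paragraphs if len(p.split()) >= min_words)
```

The output of such a function (or of `get_article_from_url`) is what would then be passed to the LLM for summarization.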


2. auto_job__find__chatgpt__rpa

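Uses RPA to open a browser and perform actions, then sends the collected information to the GPT Assistants API to generate insights and handle follow-up actions automatically.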

https://github.com/Frrrrrrrrank/auto_job__find__chatgpt__rpa

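```python
import time

from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

import finding_jobs  # helper module (finding_jobs.py) from the repo

# label, job_index, vectorstore, assistant_id and the helpers should_use_langchain(),
# generate_letter(), chat() and send_response_and_go_back() are defined elsewhere in the repo
driver = finding_jobs.get_driver()

# Change the dropdown option
finding_jobs.select_dropdown_option(driver, label)

# Call the function in finding_jobs.py to get the job description
job_description = finding_jobs.get_job_description_by_index(job_index)

if job_description:
    element = driver.find_element(By.CSS_SELECTOR, '.op-btn.op-btn-chat').text
    print(element)
    if element == '立即沟通':  # the page's "Chat now" button label
        # Send the description to the model and print the response
        if should_use_langchain():
            response = generate_letter(vectorstore, job_description)
        else:
            response = chat(job_description, assistant_id)
        print(response)
        time.sleep(1)

        # Click the contact button
        contact_button = driver.find_element(By.XPATH, "//*[@id='wrap']/div[2]/div[2]/div/div/div[2]/div/div[1]/div[2]/a[2]")
        contact_button.click()

        # Wait for the chat input box to appear
        xpath_locator_chat_box = "//*[@id='chat-input']"
        chat_box = WebDriverWait(driver, 50).until(
            EC.presence_of_element_located((By.XPATH, xpath_locator_chat_box))
        )

        # Send the response and return to the job list
        send_response_and_go_back(driver, response)
```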

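The RPA script drives the browser to collect information and perform actions (here, reading a job description and opening a chat).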

```python
from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment

# Upload the PDF so the assistant can search it
with open("my_cover.pdf", "rb") as f:
    file = client.files.create(file=f, purpose="assistants")

assistant = client.beta.assistants.create(
    # Getting assistant prompt from "prompts.py" file, edit on left panel if you want to change the prompt
    instructions=assistant_instructions,
    model="gpt-3.5-turbo-1106",
    tools=[
        {
            "type": "retrieval"  # This adds the knowledge base as a tool
        },
    ],
    file_ids=[file.id])
```

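This relies on GPT's "retrieval" tool, which stores and indexes the uploaded files in a vector database and searches them for relevant content. (This is the v1 Assistants API; in v2 the `retrieval` tool was replaced by `file_search`, and files are attached through `tool_resources` instead of `file_ids`.)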
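To make the retrieval idea concrete, here is a toy in-memory version of what such a tool does conceptually: split documents into overlapping chunks, turn each chunk into a vector, and return the chunks most similar to the query. This is not how OpenAI implements retrieval; word-count vectors stand in for a real embedding model, and `chunk_words`, `TinyVectorStore` and the chunk sizes are hypothetical.

```python
import math
import re
from collections import Counter


def chunk_words(text, size=50, overlap=10):
    """Split text into chunks of `size` words, each overlapping the previous by `overlap` words."""
    assert size > overlap >= 0
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break
    return chunks


def vectorize(text):
    """Bag-of-words term counts, standing in for an embedding vector."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class TinyVectorStore:
    def __init__(self):
        self.items = []  # list of (chunk_text, vector)

    def add(self, text, size=50, overlap=10):
        for chunk in chunk_words(text, size, overlap):
            self.items.append((chunk, vectorize(chunk)))

    def search(self, query, k=3):
        """Return the k chunks with the highest cosine similarity to the query."""
        q = vectorize(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]
```

A real setup swaps `vectorize` for an embedding model and the list for a vector database; the chunk, embed, rank flow stays the same.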

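```python
# client, thread_id, user_input and assistant_id are created earlier in the script
# Add the user's message to the conversation thread
client.beta.threads.messages.create(
    thread_id=thread_id,
    role="user",
    content=user_input
)

# Start the Assistant Run
run = client.beta.threads.runs.create(
    thread_id=thread_id,
    assistant_id=assistant_id
)
```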

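The GPT Assistants API can act as a "slow thinking" step: the assistant reasons over the material in depth and analyzes it automatically, which tends to produce better analysis results.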
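`runs.create` returns as soon as the run is queued; the assistant works asynchronously, so the script has to poll the run until it reaches a final status before reading the reply. The helper below is a minimal, SDK-independent sketch of that polling loop; `wait_for_run` and its parameters are hypothetical, and `fetch_status` is injected so the loop can be tested without network access.

```python
import time

# Statuses after which polling should stop. "requires_action" means the run is
# waiting for tool outputs from our code; "incomplete" exists in the v2 API.
TERMINAL_STATUSES = {"completed", "failed", "cancelled", "expired", "incomplete", "requires_action"}


def wait_for_run(fetch_status, poll_interval=1.0, timeout=60.0,
                 sleep=time.sleep, clock=time.monotonic):
    """Call fetch_status() until it returns a terminal status; raise TimeoutError after `timeout` seconds."""
    deadline = clock() + timeout
    while True:
        status = fetch_status()
        if status in TERMINAL_STATUSES:
            return status
        if clock() >= deadline:
            raise TimeoutError(f"run still {status!r} after {timeout} seconds")
        sleep(poll_interval)
```

With the openai SDK, `fetch_status` could be `lambda: client.beta.threads.runs.retrieve(thread_id=thread_id, run_id=run.id).status`; once it returns `"completed"`, the reply can be read with `client.beta.threads.messages.list(thread_id=thread_id)`.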

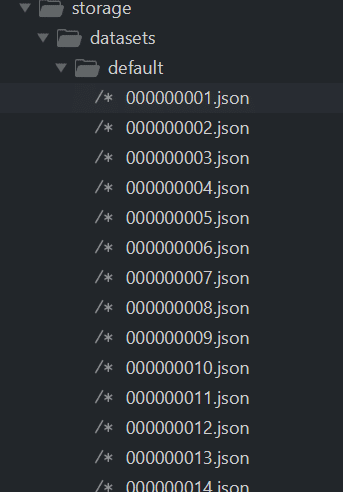

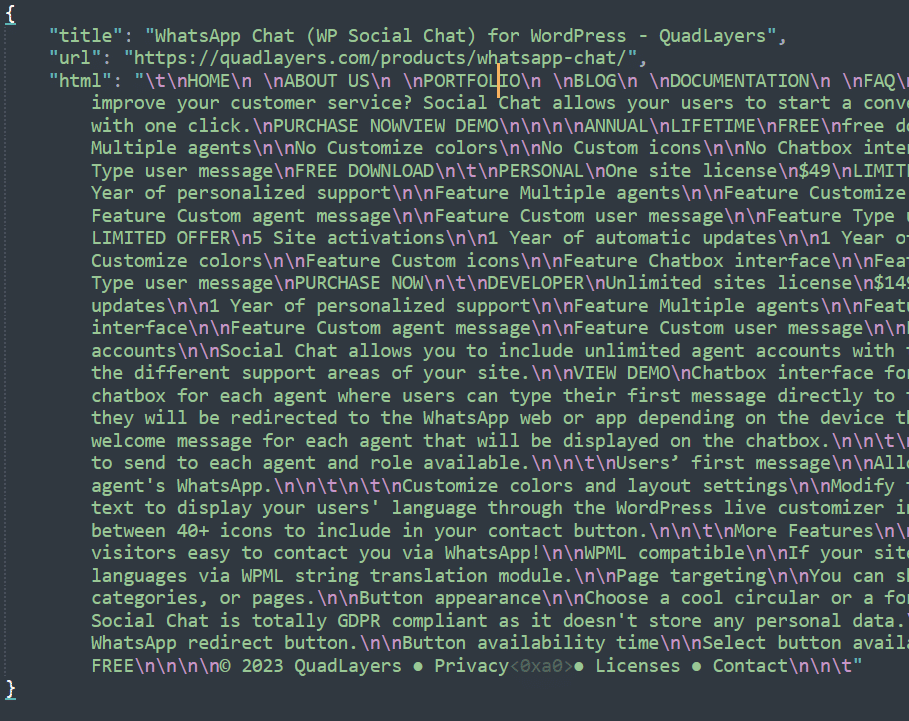

3. gpt-crawler

Automatically opens a browser and crawls a site in depth, downloading its articles to build an article dataset.

https://github.com/BuilderIO/gpt-crawler

A single command crawls many pages of a website automatically, which is often very useful for AI development.

There is also a similar project: https://github.com/mendableai/firecrawl
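gpt-crawler is configured with a start URL, a URL pattern to match and a page limit, and then follows links from page to page. The core of such a multi-page crawl is a breadth-first traversal restricted to one site; below is a minimal stdlib sketch of that idea, not gpt-crawler's implementation. `crawl`, `LinkParser` and the `fetch` callback are hypothetical; `fetch(url)` is assumed to return the page HTML as a string, or `None` on failure.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkParser(HTMLParser):
    """Collect href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, fetch, max_pages=50):
    """Breadth-first crawl of pages on start_url's host; returns {url: html}."""
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    pages = {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        pages[url] = html
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop #fragments so each page is visited once
            link, _ = urldefrag(urljoin(url, href))
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append(link)
    return pages
```

In real use, `fetch` might wrap `urllib.request.urlopen(url, timeout=10)`; tools like gpt-crawler use a real browser instead so that JavaScript-rendered pages also work.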

4. laravel-site-search

A Laravel package (PHP) that crawls an entire site in depth and imports the content into Meilisearch, so it can be searched right away.

https://github.com/spatie/laravel-site-search

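When a vector database is not a good fit, you can retrieve relevant content with keyword search instead. Because the package also crawls an entire multi-page site in one step, it often suits AI development well.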
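Full-text search engines such as Meilisearch are built around an inverted index (Meilisearch adds typo tolerance, ranking rules and more on top). As a contrast to vector similarity, the toy below shows only the core idea of keyword lookup with AND semantics; it is not Meilisearch's implementation or API, and `InvertedIndex` is a hypothetical name.

```python
import re
from collections import defaultdict


def tokenize(text):
    return re.findall(r"\w+", text.lower())


class InvertedIndex:
    """Map each term to the set of document ids that contain it."""

    def __init__(self):
        self.postings = defaultdict(set)
        self.docs = {}

    def add(self, doc_id, text):
        self.docs[doc_id] = text
        for term in tokenize(text):
            self.postings[term].add(doc_id)

    def search(self, query):
        """Return sorted ids of documents containing every query term."""
        terms = tokenize(query)
        if not terms:
            return []
        # .get avoids inserting empty entries into the defaultdict
        matches = set.intersection(*(self.postings.get(t, set()) for t in terms))
        return sorted(matches)
```

Exact keyword matching like this works well for names, error messages and identifiers, where embedding similarity can be fuzzy.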

5. RapidAPI and SerpApi

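Both let you pull rich data from search engines, large websites and similar sources through APIs (RapidAPI as a marketplace of third-party APIs, SerpApi for search engine results), which makes them a solid option.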

Apify and Bright Data also sell a wide range of data; LangChain, for example, can load Apify datasets: https://js.langchain.com/v0.1/docs/integrations/document_loaders/web_loaders/apify_dataset/
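As an example of the API route, a SerpApi Google search is a single HTTP GET. The sketch below uses only the standard library; the `search.json` endpoint, the `engine`/`q`/`api_key` parameters and the `organic_results` field (with `title`, `link`, `snippet`) are assumptions based on SerpApi's public docs, so check them against the current reference. The function names are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

SERPAPI_ENDPOINT = "https://serpapi.com/search.json"  # assumed endpoint


def build_serpapi_url(query, api_key, engine="google"):
    params = {"engine": engine, "q": query, "api_key": api_key}
    return f"{SERPAPI_ENDPOINT}?{urlencode(params)}"


def parse_organic_results(payload):
    """Extract (title, link, snippet) tuples from a SerpApi JSON response dict."""
    return [
        (r.get("title", ""), r.get("link", ""), r.get("snippet", ""))
        for r in payload.get("organic_results", [])
    ]


def serpapi_search(query, api_key, timeout=15):
    """Perform the request and return the parsed organic results (needs network and a key)."""
    with urlopen(build_serpapi_url(query, api_key), timeout=timeout) as resp:
        return parse_organic_results(json.load(resp))
```

Keeping URL building and response parsing separate from the network call makes the parsing easy to test with saved responses.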

6. Jina Reader: https://jina.ai/reader/

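Prefix a page's URL with https://r.jina.ai/ and request it, and you get back the page's main content as clean text. This is often very useful for a micro-SaaS, especially when feeding pages to an LLM: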

Demo:

https://r.jina.ai/https://stackoverflow.com/questions/45802404/failed-to-execute-setattribute-on-element-is-not-a-valid-attribute-name
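Calling Jina Reader from code is just an HTTP GET on the prefixed URL, as the demo link shows. A minimal stdlib sketch follows; the function names are hypothetical, and decoding the body as UTF-8 is an assumption.

```python
from urllib.request import urlopen

READER_PREFIX = "https://r.jina.ai/"


def reader_url(page_url):
    """Build the Jina Reader URL by prefixing the target page URL."""
    return READER_PREFIX + page_url


def fetch_readable(page_url, timeout=30):
    """Fetch the LLM-friendly text Jina Reader returns for page_url (needs network)."""
    with urlopen(reader_url(page_url), timeout=timeout) as resp:
        return resp.read().decode("utf-8")  # assumes a UTF-8 response body
```

The returned text can go straight into a prompt, which is why this approach suits small products that do not want to run their own browser.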

7. firecrawl

https://github.com/mendableai/firecrawl

Turn entire websites into LLM-ready markdown or structured data. Scrape, crawl, search and extract with a single API.
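Firecrawl is used over HTTP (or through its SDKs). Below is a minimal stdlib sketch of scraping one page to markdown. The `/v1/scrape` endpoint, the Bearer `Authorization` header, the `formats` field and the `{"data": {"markdown": ...}}` response shape are assumptions based on Firecrawl's public docs at the time of writing and may differ in newer API versions; the function names are hypothetical.

```python
import json
from urllib.request import Request, urlopen

FIRECRAWL_SCRAPE_URL = "https://api.firecrawl.dev/v1/scrape"  # assumed endpoint


def build_scrape_request(page_url, api_key, formats=("markdown",)):
    """Build (but do not send) a POST request asking Firecrawl to scrape page_url."""
    body = json.dumps({"url": page_url, "formats": list(formats)}).encode("utf-8")
    return Request(
        FIRECRAWL_SCRAPE_URL,
        data=body,
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def scrape_markdown(page_url, api_key, timeout=60):
    """Send the request and return the markdown field (needs network and a key)."""
    with urlopen(build_scrape_request(page_url, api_key), timeout=timeout) as resp:
        payload = json.load(resp)
    # Assumed response shape: {"success": true, "data": {"markdown": "..."}}
    return (payload.get("data") or {}).get("markdown", "")
```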